Tacotron2TTSBundle

- class torchaudio.pipelines.Tacotron2TTSBundle

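Data class that bundles the information needed to use a pretrained Tacotron2 model and vocoder.

This class provides interfaces for instantiating the pretrained model, along with the information needed to retrieve the pretrained weights and additional data to be used with the model.

Torchaudio instantiates objects of this class, each of which represents a different pretrained model. Client code should access pretrained models via these instances.

Please see below for the usage and the available values.

- Example - Character-based TTS pipeline with Tacotron2 and WaveRNN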

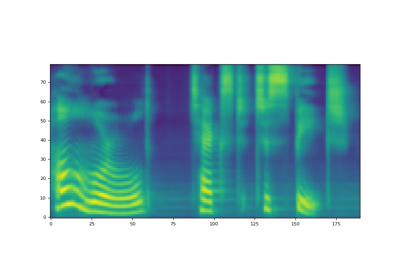

    >>> import torchaudio
    >>>
    >>> text = "Hello, T T S !"
    >>> bundle = torchaudio.pipelines.TACOTRON2_WAVERNN_CHAR_LJSPEECH
    >>>
    >>> # Build processor, Tacotron2 and WaveRNN model
    >>> processor = bundle.get_text_processor()
    >>> tacotron2 = bundle.get_tacotron2()
    Downloading: 100%|███████████████████████████████| 107M/107M [00:01<00:00, 87.9MB/s]
    >>> vocoder = bundle.get_vocoder()
    Downloading: 100%|███████████████████████████████| 16.7M/16.7M [00:00<00:00, 78.1MB/s]
    >>>
    >>> # Encode text
    >>> input, lengths = processor(text)
    >>>
    >>> # Generate (mel-scale) spectrogram
    >>> specgram, lengths, _ = tacotron2.infer(input, lengths)
    >>>
    >>> # Convert spectrogram to waveform
    >>> waveforms, lengths = vocoder(specgram, lengths)
    >>>
    >>> torchaudio.save('hello-tts.wav', waveforms, vocoder.sample_rate)

- Example - Phoneme-based TTS pipeline with Tacotron2 and WaveRNN

    >>> # Note:
    >>> # This bundle uses pre-trained DeepPhonemizer as
    >>> # the text pre-processor.
    >>> # Please install deep-phonemizer.
    >>> # See https://github.com/as-ideas/DeepPhonemizer
    >>> # The pretrained weight is automatically downloaded.
    >>>
    >>> import torchaudio
    >>>
    >>> text = "Hello, TTS!"
    >>> bundle = torchaudio.pipelines.TACOTRON2_WAVERNN_PHONE_LJSPEECH
    >>>
    >>> # Build processor, Tacotron2 and WaveRNN model
    >>> processor = bundle.get_text_processor()
    Downloading: 100%|███████████████████████████████| 63.6M/63.6M [00:04<00:00, 15.3MB/s]
    >>> tacotron2 = bundle.get_tacotron2()
    Downloading: 100%|███████████████████████████████| 107M/107M [00:01<00:00, 87.9MB/s]
    >>> vocoder = bundle.get_vocoder()
    Downloading: 100%|███████████████████████████████| 16.7M/16.7M [00:00<00:00, 78.1MB/s]
    >>>
    >>> # Encode text
    >>> input, lengths = processor(text)
    >>>
    >>> # Generate (mel-scale) spectrogram
    >>> specgram, lengths, _ = tacotron2.infer(input, lengths)
    >>>
    >>> # Convert spectrogram to waveform
    >>> waveforms, lengths = vocoder(specgram, lengths)
    >>>
    >>> torchaudio.save('hello-tts.wav', waveforms, vocoder.sample_rate)
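
The vocoder returns the waveforms as one padded batch together with the valid length of each item, so when several sentences are synthesized at once, each item would typically be trimmed before it is saved. The following is a minimal sketch of that step, assuming `waveforms` is indexable as (batch, time) and `lengths` holds one sample count per item. `trim_batch` is a hypothetical helper for illustration, not part of torchaudio.

```python
def trim_batch(waveforms, lengths):
    """Drop the padding from each item of a padded batch.

    `waveforms` is a (batch, time) sequence and `lengths` gives the number
    of valid samples per item. The sketch uses nested lists; the same
    slicing should apply to the tensors returned by the vocoder.
    """
    return [row[: int(n)] for row, n in zip(waveforms, lengths)]


# Toy batch: the second item is padded with two zeros.
batch = [[0.1, 0.2, 0.3, 0.4], [0.5, 0.6, 0.0, 0.0]]
valid = [4, 2]
print(trim_batch(batch, valid))
```

With real tensors, each trimmed row is one-dimensional, so it would need `unsqueeze(0)` to become (channel, time) before being passed to `torchaudio.save`.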

- Tutorials using Tacotron2TTSBundle

Methods

get_tacotron2

- abstract Tacotron2TTSBundle.get_tacotron2(*, dl_kwargs=None) → Tacotron2

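Create a Tacotron2 model with pretrained weights.

- Parameters:

dl_kwargs (dictionary of keyword arguments) – Passed to torch.hub.load_state_dict_from_url().

- Returns:

The resulting model.

- Return type:

Tacotron2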

get_text_processor

- abstract Tacotron2TTSBundle.get_text_processor(*, dl_kwargs=None) → TextProcessor

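Create a text processor.

For a character-based pipeline, this processor splits the input text by character. For a phoneme-based pipeline, this processor converts the input text (graphemes) to phonemes.

If a pretrained weight file is necessary, torch.hub.download_url_to_file() is used to download it.

- Parameters:

dl_kwargs (dictionary of keyword arguments) – Passed to torch.hub.download_url_to_file().

- Returns:

A callable which takes a string or a list of strings as input and returns a Tensor of encoded text and a Tensor of valid lengths. The object also has a tokens property, which allows recovering the tokenized form.

- Return type:

TextProcessor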

- Example - Character-based

    >>> text = [
    ...     "Hello World!",
    ...     "Text-to-speech!",
    ... ]
    >>> bundle = torchaudio.pipelines.TACOTRON2_WAVERNN_CHAR_LJSPEECH
    >>> processor = bundle.get_text_processor()
    >>> input, lengths = processor(text)
    >>>
    >>> print(input)
    tensor([[19, 16, 23, 23, 26, 11, 34, 26, 29, 23, 15,  2,  0,  0,  0],
            [31, 16, 35, 31,  1, 31, 26,  1, 30, 27, 16, 16, 14, 19,  2]],
           dtype=torch.int32)
    >>>
    >>> print(lengths)
    tensor([12, 15], dtype=torch.int32)
    >>>
    >>> print([processor.tokens[i] for i in input[0, :lengths[0]]])
    ['h', 'e', 'l', 'l', 'o', ' ', 'w', 'o', 'r', 'l', 'd', '!']
    >>> print([processor.tokens[i] for i in input[1, :lengths[1]]])
    ['t', 'e', 'x', 't', '-', 't', 'o', '-', 's', 'p', 'e', 'e', 'c', 'h', '!']
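
To make the character-based encoding concrete, here is a minimal pure-Python sketch of the same idea: lowercase the text, map each character to an index, pad shorter items with 0, and report the valid lengths. The symbol table `SYMBOLS` is an assumption, chosen so that its indices agree with the output shown above; `encode` and `decode` are hypothetical helpers, and the sketch returns plain lists rather than Tensors.

```python
# Assumed symbol table: chosen so that the indices match the output shown
# above ('_' = 0 is padding, '-' = 1, '!' = 2, ' ' = 11, 'a' = 12, ...).
SYMBOLS = "_-!'(),.:;? abcdefghijklmnopqrstuvwxyz"
TOKEN_TO_ID = {symbol: index for index, symbol in enumerate(SYMBOLS)}


def encode(texts):
    """Lowercase each text, map characters to IDs, and pad with 0."""
    if isinstance(texts, str):
        texts = [texts]
    # Characters outside the table are skipped in this sketch.
    rows = [[TOKEN_TO_ID[c] for c in t.lower() if c in TOKEN_TO_ID] for t in texts]
    lengths = [len(row) for row in rows]
    width = max(lengths, default=0)
    padded = [row + [0] * (width - len(row)) for row in rows]
    return padded, lengths


def decode(row, length):
    """Recover the tokens of one item, ignoring the padding."""
    return [SYMBOLS[i] for i in row[:length]]


ids, lengths = encode(["Hello World!", "Text-to-speech!"])
print(ids[0])
print(lengths)
print(decode(ids[0], lengths[0]))
```

Slicing by the valid length before decoding is what keeps the padding token out of the recovered text.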

- Example - Phoneme-based

    >>> text = [
    ...     "Hello World!",
    ...     "Text-to-speech!",
    ... ]
    >>> bundle = torchaudio.pipelines.TACOTRON2_WAVERNN_PHONE_LJSPEECH
    >>> processor = bundle.get_text_processor()
    Downloading: 100%|███████████████████████████████| 63.6M/63.6M [00:04<00:00, 15.3MB/s]
    >>> input, lengths = processor(text)
    >>>
    >>> print(input)
    tensor([[54, 20, 65, 69, 11, 92, 44, 65, 38,  2,  0,  0,  0,  0],
            [81, 40, 64, 79, 81,  1, 81, 20,  1, 79, 77, 59, 37,  2]],
           dtype=torch.int32)
    >>>
    >>> print(lengths)
    tensor([10, 14], dtype=torch.int32)
    >>>
    >>> print([processor.tokens[i] for i in input[0]])
    ['HH', 'AH', 'L', 'OW', ' ', 'W', 'ER', 'L', 'D', '!', '_', '_', '_', '_']
    >>> print([processor.tokens[i] for i in input[1]])
    ['T', 'EH', 'K', 'S', 'T', '-', 'T', 'AH', '-', 'S', 'P', 'IY', 'CH', '!']

get_vocoder

- abstract Tacotron2TTSBundle.get_vocoder(*, dl_kwargs=None) → Vocoder

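Create a vocoder module, based on either WaveRNN or GriffinLim.

If a pretrained weight file is necessary, torch.hub.load_state_dict_from_url() is used to download it.

- Parameters:

dl_kwargs (dictionary of keyword arguments) – Passed to torch.hub.load_state_dict_from_url().

- Returns:

A vocoder module, which takes a spectrogram Tensor and an optional length Tensor, then returns the resulting waveform Tensor and an optional length Tensor.

- Return type:

Vocoder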
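
Since the three methods forward dl_kwargs to different torch.hub functions, the options have to suit the function each one calls. The sketch below shows one possible way to share download options across them. It assumes that `progress` is accepted by both torch.hub functions and that `model_dir` is only passed to torch.hub.load_state_dict_from_url(); `load_pipeline`, `cache_dir` and `show_progress` are hypothetical names introduced for illustration.

```python
def load_pipeline(bundle, cache_dir=None, show_progress=True):
    """Build the three components of a bundle with shared download options."""
    # `progress` is an option of both torch.hub download functions.
    processor_kwargs = {"progress": show_progress}
    # `model_dir` is an option of torch.hub.load_state_dict_from_url() only,
    # so it is not passed to the text processor.
    weight_kwargs = dict(processor_kwargs)
    if cache_dir is not None:
        weight_kwargs["model_dir"] = cache_dir

    processor = bundle.get_text_processor(dl_kwargs=processor_kwargs)
    tacotron2 = bundle.get_tacotron2(dl_kwargs=weight_kwargs)
    vocoder = bundle.get_vocoder(dl_kwargs=weight_kwargs)
    return processor, tacotron2, vocoder


# Intended use (downloads weights on first call, so it is left commented out):
# import torchaudio
# bundle = torchaudio.pipelines.TACOTRON2_WAVERNN_CHAR_LJSPEECH
# processor, tacotron2, vocoder = load_pipeline(bundle, cache_dir="./weights")
```

Keeping the two dictionaries separate avoids handing an unsupported keyword to the download call behind get_text_processor.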